Introduction

This is Mano from TIG. This is day 2 of the Terraform Series 2025.

In this article I try out Pike.

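What is Pike

Pike, developed by James Woolfenden, is a tool that statically analyzes Terraform code and generates an IAM policy that follows the principle of least privilege for what terraform apply needs. What appealed to me is that it scans the .tf code directly.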

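When building infrastructure with Terraform, I think it is common to run it with fairly broad permissions (for example, permission to create services that are not actually needed). This raises the concern that a security incident or an operational mistake could lead to unexpected results. On the other hand, strictly following the principle of least privilege is difficult and costly, so many teams seem to settle on a pragmatic compromise (a common practice has been to tolerate broad permissions during development and narrow them once the service has matured).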

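Personally, I had found it quite painful to investigate the permissions terraform apply needs and add them to the policy every time a new resource type was introduced, so a support tool like this sounded like a godsend.

Let's try it out.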

Installation

The README has instructions for each environment. Since I already had a Go environment, I installed it as follows.

```
$ go install github.com/jameswoolfenden/pike@v0.3.47
```

Pike offers many commands, such as deploying an IAM policy directly to AWS, but this time I only use scan.

Preparing the target resources

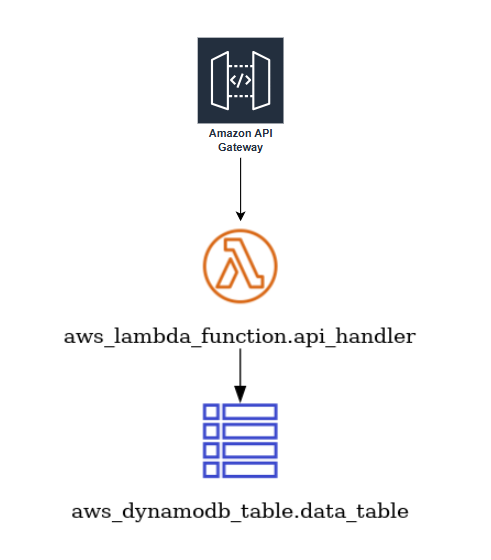

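I prepared Terraform code containing resources like the following: API Gateway + Lambda + DynamoDB (plus CloudWatch metrics and alarms, which are not shown in the diagram). The diagram was generated with inframap and lightly edited.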

main.tf

```hcl
terraform {
  # ... (omitted)
}

# ... (the remaining resources in main.tf are omitted)
```

Running Pike

Run the pike command in the same directory. It responded instantly. Fast.

```
$ pike scan -output json
```

The permissions are summarized below.

| Service | Access level | Resource | Request condition |
|---|---|---|---|
| API Gateway | Full: Read, Write | All resources | None |
| API Gateway V2 | Full access | All resources | None |
| CloudWatch | Limited: List, Read, Write | All resources | None |
| DynamoDB | Limited: Read, Write | All resources | None |
| EC2 | Limited: List | All resources | None |
| IAM | Limited: List, Permissions management, Read, Write | All resources | None |
| Lambda | Limited: List, Permissions management, Read, Write | All resources | None |

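At first glance nothing looks off at the level of broad categories, but a few details caught my attention. Pike appears to set Resource to * in essentially every case.

Next, I use this JSON to create an IAM policy → create an IAM role with that policy attached → switch to the role → run terraform apply.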

```
$ terraform apply
```

Surprisingly, it succeeded on the very first try 🎉🎉🎉
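The steps used to create the policy and role are not shown above. As one possible approach, the sketch below expresses the same flow in Terraform. It assumes Pike's JSON output was saved as a plain IAM policy document in a file named pike-policy.json; the file name, the policy and role names, and the trust policy (allowing principals in the same account to assume the role) are all hypothetical.

```hcl
# Hypothetical trust policy: principals in this account may assume the role.
data "aws_caller_identity" "current" {}

data "aws_iam_policy_document" "assume_role" {
  statement {
    actions = ["sts:AssumeRole"]
    principals {
      type        = "AWS"
      identifiers = ["arn:aws:iam::${data.aws_caller_identity.current.account_id}:root"]
    }
  }
}

# Role that will be used to run terraform apply (hypothetical name).
resource "aws_iam_role" "terraform_apply" {
  name               = "pike-terraform-apply"
  assume_role_policy = data.aws_iam_policy_document.assume_role.json
}

# Policy built from Pike's output, assumed to be saved as pike-policy.json.
resource "aws_iam_policy" "pike_generated" {
  name   = "pike-generated"
  policy = file("${path.module}/pike-policy.json")
}

# Attach the Pike-generated policy to the role.
resource "aws_iam_role_policy_attachment" "pike_generated" {
  role       = aws_iam_role.terraform_apply.name
  policy_arn = aws_iam_policy.pike_generated.arn
}
```

To actually switch to this role, the main configuration's provider "aws" block can set the role's ARN in an assume_role block (role_arn), or you can use an AWS CLI profile configured with role_arn.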

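Is it really least privilege? Removing some permissions

The Lambda function being created is defined to run outside a VPC. The following EC2 permissions related to network interfaces therefore looked unnecessary, so I removed them.

I removed the following from the policy.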

- ec2:DescribeAccountAttributes
- ec2:DescribeNetworkInterfaces

After running terraform destroy once and applying again, it still succeeds.

```
$ terraform apply
```

So Pike is not perfectly strict: its output can include permissions that are not actually needed.
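If you want to keep Pike's output untouched and record manual removals like the two EC2 actions above as code, one option is to post-process the JSON in Terraform. This is a sketch under assumptions: the file name pike-policy.json and the policy name are hypothetical, and it assumes every Statement holds its actions as a list under Action.

```hcl
locals {
  # Hypothetical file: Pike's JSON output saved as a policy document.
  pike_policy = jsondecode(file("${path.module}/pike-policy.json"))

  # Actions judged unnecessary for a Lambda that runs outside a VPC.
  excluded_actions = [
    "ec2:DescribeAccountAttributes",
    "ec2:DescribeNetworkInterfaces",
  ]

  # Rebuild each statement with the excluded actions removed.
  # Assumes Action is a list; a statement left with no actions is invalid in IAM.
  trimmed_policy = merge(local.pike_policy, {
    Statement = [
      for s in local.pike_policy.Statement : merge(s, {
        Action = tolist(setsubtract(s.Action, local.excluded_actions))
      })
    ]
  })
}

resource "aws_iam_policy" "pike_trimmed" {
  name   = "pike-trimmed" # hypothetical name
  policy = jsonencode(local.trimmed_policy)
}
```

Keeping the exclusion list in code means that when Pike is re-run after the configuration changes, the same manual decisions are reapplied and stay visible in review.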

Adding a resource Pike is sure to ignore

As shown below, I add code that creates an S3 bucket via local-exec.

```hcl
# ... (omitted) ...

locals {
  bucket_name = "pike-${random_string.bucket_suffix.result}"
}

resource "terraform_data" "s3_bucket_via_cli" {
  input = {
    bucket_name = local.bucket_name
  }

  # Create the bucket with the AWS CLI (not visible to Pike's static scan)
  provisioner "local-exec" {
    command     = <<EOT
aws s3api create-bucket \
  --bucket ${self.input.bucket_name} \
  --create-bucket-configuration LocationConstraint=ap-northeast-1
EOT
    interpreter = ["bash", "-c"]
    on_failure  = fail
  }

  # Delete the bucket on terraform destroy
  provisioner "local-exec" {
    when        = destroy
    command     = <<EOT
aws s3api delete-bucket \
  --bucket ${self.input.bucket_name}
EOT
    interpreter = ["bash", "-c"]
  }
}

output "bucket_name_created_via_local_exec" {
  description = "Name of the S3 bucket presumably created via local-exec"
  value       = local.bucket_name
}
```

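Running the pike command again shows no difference from before (naturally, since it cannot be expected to parse the commands executed by local-exec).

As a result, and again as expected, terraform apply fails because the S3 permissions are missing.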

```
$ terraform apply
```
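Since Pike cannot see commands run through local-exec, permissions for them have to be added by hand. A minimal sketch of such a supplementary policy follows. The two actions correspond to the create-bucket and delete-bucket calls in the code above, and the resource is narrowed to the pike- prefix used for bucket_name; the policy name and the role name pike-terraform-apply are hypothetical.

```hcl
# Added by hand: Pike cannot see the aws s3api commands run by local-exec.
data "aws_iam_policy_document" "local_exec_s3" {
  statement {
    sid = "BucketViaLocalExec"
    actions = [
      "s3:CreateBucket", # aws s3api create-bucket
      "s3:DeleteBucket", # aws s3api delete-bucket (destroy-time provisioner)
    ]
    # Scoped to the "pike-" prefix that main.tf uses for bucket_name.
    resources = ["arn:aws:s3:::pike-*"]
  }
}

resource "aws_iam_policy" "local_exec_s3" {
  name   = "pike-local-exec-s3" # hypothetical name
  policy = data.aws_iam_policy_document.local_exec_s3.json
}

resource "aws_iam_role_policy_attachment" "local_exec_s3" {
  role       = "pike-terraform-apply" # hypothetical: the role used for terraform apply
  policy_arn = aws_iam_policy.local_exec_s3.arn
}
```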

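Impressions after using it

For simple configurations, my positive impression is that it may well succeed fairly often.

However, with configurations like the one in 「Terraformの便利ツールを使ってみよう(第一回)〜Pike編〜」 ("Let's try handy Terraform tools, part 1: Pike"), there are cases where apply only succeeded after manually adding permissions several times. So it is probably a good idea to do a trial run with your own configuration first (for example, checking whether apply works in a different region).

To begin with, if you are motivated to use Pike at all, your setup is probably of a certain size, so my impression is that some manual maintenance will likely be needed.

A few more impressions: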

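- Naturally, resources created through local-exec or similar mechanisms are not supported.

- I did not try it this time, but the logs suggested that it also scans code pulled in through Terraform modules.

- It works at the level of actions per resource type (such as ec2:RunInstances) and does not appear to generate finer-grained permissions restricted to specific resource names. It is worth understanding this before using it (though I think this is acceptable).

- Seeing the list of permissions required to run Terraform gives a rough picture of which services are in use, which could be handy when getting up to speed on a codebase (although properly maintaining an architecture diagram is the better way to do that).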
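As noted above, Pike emits Resource "*". If you want to narrow this by hand, only actions that support resource-level permissions can be scoped to ARNs; account-level list actions still need "*". The sketch below illustrates this for DynamoDB with a hypothetical region (ap-northeast-1) and table-name prefix (pike-); the action list is illustrative, not Pike's actual output.

```hcl
data "aws_caller_identity" "current" {}

data "aws_iam_policy_document" "dynamodb_scoped" {
  # Table-level actions support resource-level permissions,
  # so they can be narrowed to a (hypothetical) table-name prefix.
  statement {
    sid = "TablesWithPrefix"
    actions = [
      "dynamodb:CreateTable",
      "dynamodb:DescribeTable",
      "dynamodb:DeleteTable",
    ]
    resources = [
      "arn:aws:dynamodb:ap-northeast-1:${data.aws_caller_identity.current.account_id}:table/pike-*",
    ]
  }

  # Illustrative account-level action: it does not support
  # resource-level permissions, so it must stay on "*".
  statement {
    sid       = "ListTables"
    actions   = ["dynamodb:ListTables"]
    resources = ["*"]
  }
}
```

Whether this extra precision is worth the maintenance cost depends on the team; as the bullet above says, accepting "*" may be a reasonable trade-off.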

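Closing

I tried Pike. Even with simple Terraform code it included a few unnecessary permissions, but overall it generated a policy containing roughly the permissions I expected.

Depending on the configuration, there seem to be cases where it will not work without manual additions. So rather than aiming for full automation, I think you need a workflow that includes manual fixes and reviews.

It runs very fast and is easy to install, so it is an easy tool to try: just run it in your Terraform directory first.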